Michelle Fleming, Chief Legal Officer at Bell Techlogix, Inc., shares a candid perspective on how legal leaders can responsibly embrace AI.

This article was prompted by a question from my co-worker Jayakrishnan Sureshbabu in response to a topic raised by Paul Hermelin, Chairman of the Board at Capgemini: legal’s view of AI. I started typing a comment that turned into a novel, and the result is this article. Thank you, JK and Paul, for the springboard to dive into this! From what I’m seeing in the trenches, legal stances on AI are split into three camps.

Open to Learning

There are those who are curious, ask questions, and are open to learning more about how to use AI. For example, someone asked me how I make myself comfortable providing information, such as contracts, to AI. The answer is the same as for any other technology: by vetting it. If you use a vendor such as Microsoft (e.g., Microsoft Outlook for work) and you email a contract using it, then you’re providing confidential information to Microsoft.

The California Bar issued Generative AI Practical Guidance that says lawyers have a duty to review the security of Gen AI providers just as they do for any other provider. The specific guidance is that a lawyer can input confidential information provided that the lawyer does due diligence to ensure that “any AI system in which a lawyer would input confidential information adheres to stringent security, confidentiality, and data retention policies.” Other state bars, including the New York Bar Association and the Florida Bar Association, have issued guidelines for Generative AI usage. The American Bar Association has also issued an opinion that aligns with the California Bar’s guidance (check out ABA Formal Opinion 512 on Generative Artificial Intelligence Tools).

So, we vet by looking at the provider’s security and privacy practices. At Bell Techlogix, Inc., I partner with our CTO and CISO on security vetting. For example, we check whether the provider is SOC 2 audited, whether it trains on our data, and whether our data is kept segregated from the data of other customers. We also have AI training, AI policies, and AI committees.

Hard No

Then you have the second camp, those who refuse to use it. As an example, another lawyer told me, “You’re abdicating your responsibility as a lawyer by using AI.” I think some of this stance comes from our profession being trained to identify and eliminate risk. If you don’t use it at all, then I suppose that is one way you could say you’re eliminating the risk! My view is that most of the time our role isn’t to make things zero risk. It’s to get things to a risk level that is acceptable. A point-blank “No” does nothing to advance the ball down the field. It just stops the progress. We shouldn’t be the department that puts the “No” in innovation. We should be the “Department of Know”, educating on how to use AI in a responsible manner.

I’ve also found that those who refuse to use AI in an attempt to make things zero risk are sometimes the same ones who heavily mark up contracts. Like refusing to use AI, a heavy mark up does nothing to advance the closure of the contract. The level of mark up can correlate to how long it takes to negotiate the agreement, and a ton of revisions can dilute your negotiating power compared with a few material edits. The analysis and plan you would apply to a contract should also be applied to AI tools. It’s all about identifying the risk (ALL of it), and then making an informed decision on what’s acceptable, what’s not, and how to minimise or eliminate anything that’s too high risk.

Come on in, the Water is Fine

Then we have our third camp: those of us lawyers who think AI is the way of the future and who invite others to ask questions, have a conversation about it, and attend educational events where lawyers learn how to use it. I’m in that camp. Prior to coming to Bell Techlogix, Inc., I was at a company whose flagship product was open source. There was a lot of what we called FUD (Fear, Uncertainty, Doubt) from some third parties who thought that if you used any open source, you “infected” your software and would have to make your proprietary code open source. Not at all true. You just have to read and understand the licence of the open source software you choose to use!

I’m seeing a bit of the same FUD with AI as I did with open source software. It’s just about educating yourself and understanding how to use it. The new generation of lawyers is learning to use AI in law school, and they’re likely to be faster, more efficient, and produce a higher-quality work product than those of us who have been in the field longer and haven’t been open to using AI. Marcia Narine Weldon, Founding Member of She Leads AI, does an amazing job of teaching on AI, not just to law students but also to us practicing lawyers through her panels and seminars!

I judged a contract drafting competition for a law school a few months ago. The students were allowed (but not required) to use AI in their drafting, so long as they disclosed the prompts they used to us. The instructions even gave sample ideas on how to use AI, for example, giving the AI the drafted language and asking it to run a hypothetical scenario to see how a potential contract dispute, or an event such as force majeure, would play out under that language. Pretty incredible, and I borrowed that idea for my own use!

The way I see it, AI is like a car. Imagine two people are going to the same destination 3 miles away, but one is walking and one is driving. The person in the driver’s seat still has to control the car 100% of the time: the driver has to know when to stop, turn, slow down, speed up, etc. But the one with the car is going to arrive first every time. The same is true for AI. AI can get through lengthy contracts much faster than a human, but the human still has to be in the driver’s seat because they’re trained to spot and navigate legal/contractual issues that the AI may not recognise. Still, the person with the AI will ultimately get through review/revisions faster and with more accuracy than the person without it.

Believing that every single contract has to be a uniquely artisan, handcrafted contract or you’re not doing your job is counterproductive. At best, it delays producing the contract. But you still have to review the output. I once asked AI to generate a contract for me, and it completely missed including a governing law clause. I also asked it to review a third-party contract for risks, and it missed that the indemnity was not limited to third-party claims. Those are things that, right now, it takes an experienced lawyer to know to look for and catch. I know AI will only improve and advance, though, and those of us who leverage it will only improve and advance with it.

I’ve also pushed a traditionally “legal” AI tool (called GC AI) to go beyond its intended usage of just legal work and do tasks that help us win deals, identify opportunities, and identify cost/profit issues in contracts. It’s a very exciting time.

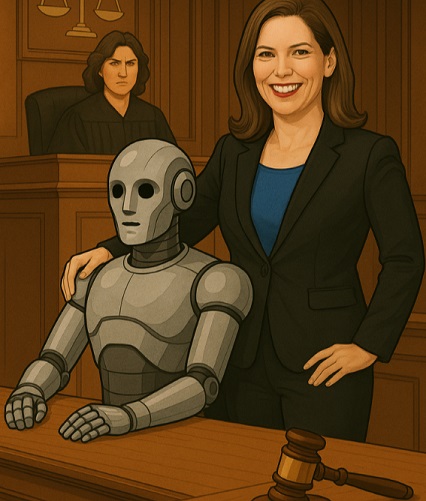

I used AI to suggest the title of the article and create the awesome cover image. That’s supposed to be my friendly face standing at the defence table with my arm around the robot.
